Hands-on Scala Learning for Enterprise AI

Learn AI by Working With Our World-Class Advanced AI Computing Lab for Free.

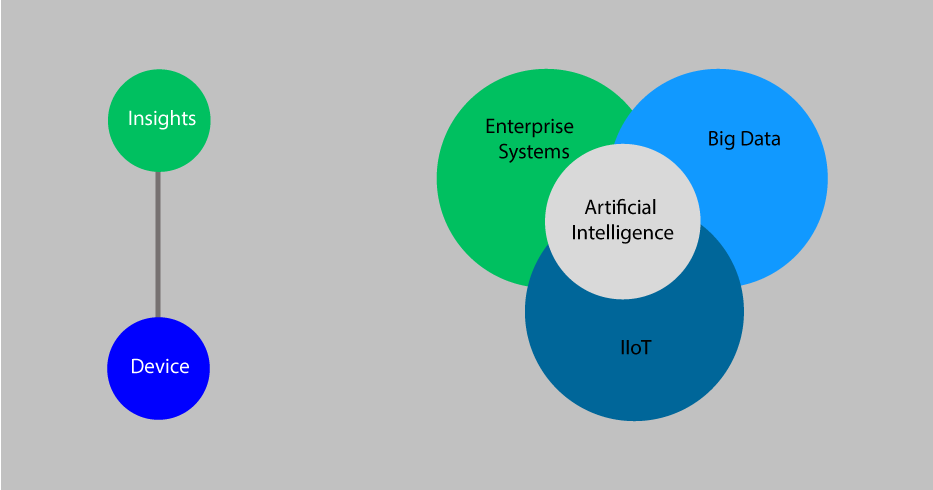

Reinvent traditional software engineering through our enterprise AI platform. Move beyond instruction-driven, hard-coded automation to self-learning machine applications built for the digital era.

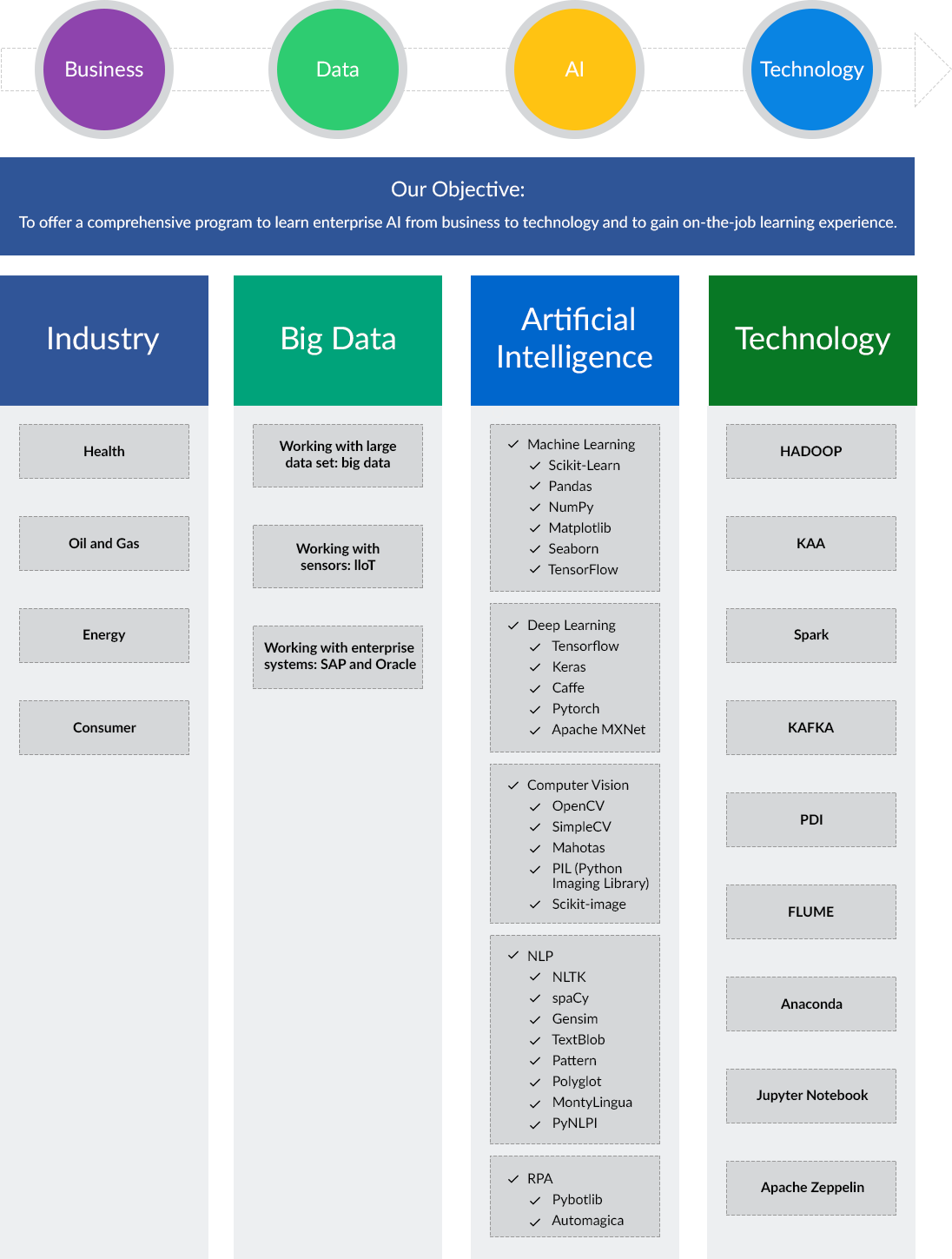

Be Empowered by Our School of Artificial Intelligence and Enabled Through Our Intelligent Automation

Learn Enterprise AI and Enterprise Data Science With Scala

Acquire Cross-Functional Skills from Business to Data to Technology by Mastering AI

Learning Path

Learn How Our School of AI Teaches Workers to Transfer Business Processes to the Modern Digital World

Choose Your Hands-On Learning Unit

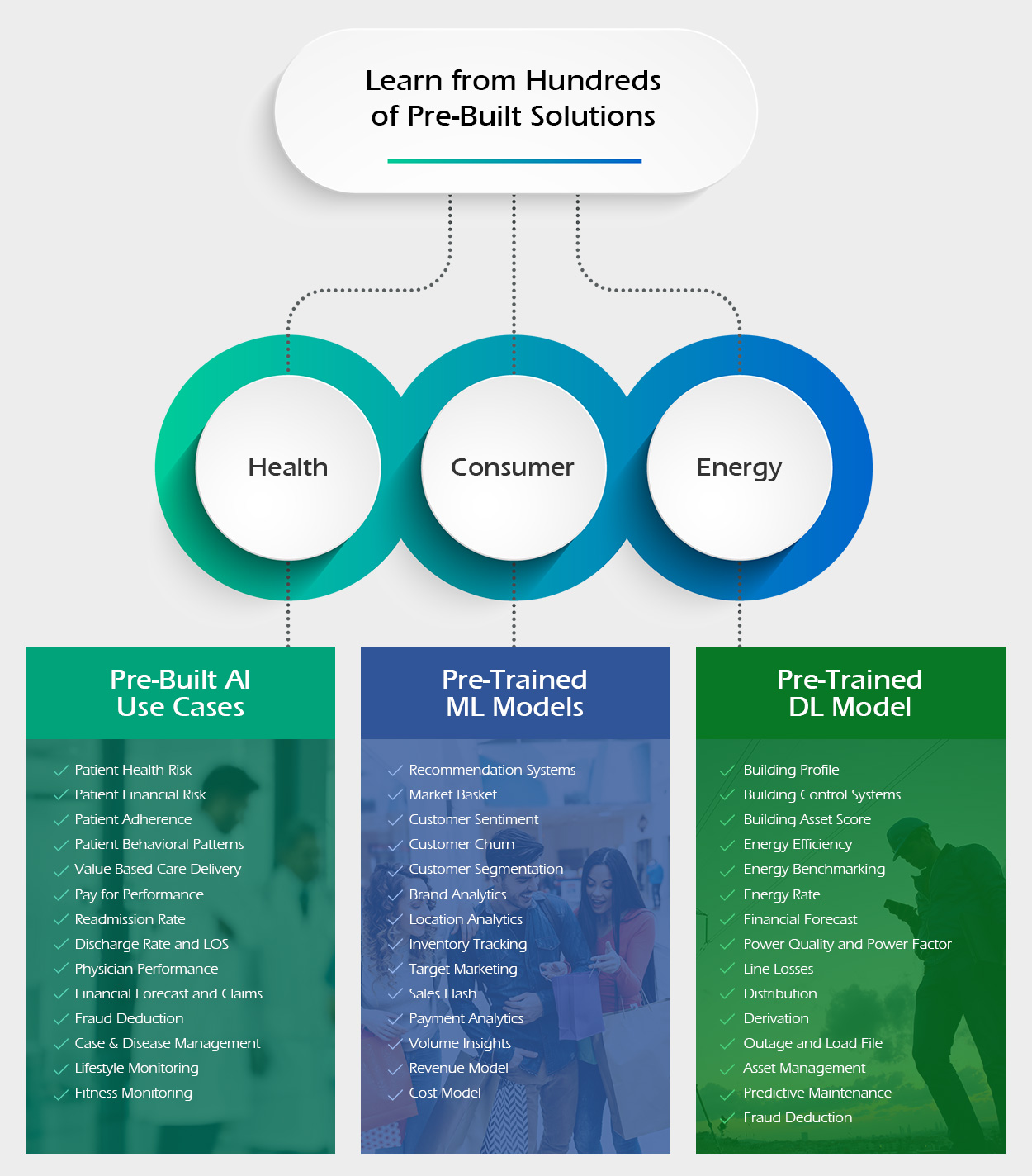

Develop an AI Application Using Our Pre-Built Scala Libraries. Coding Is No Longer Necessary

Master Artificial Intelligence with Hundreds of Predeveloped Scala Programs

- Unit 1: Scala Fundamentals
- Program #1: Hello World
- Program #2: Variable
- Program #3: Type Integer
- Program #4: Type Floating Point
- Program #5: Type String
- Program #6: Complex Numbers
- Program #7: Function Defining
- Program #8: Dynamic Typing
- Program #9: Static Typing
- Program #10: Boolean Evaluation
- Program #11: If Statement
- Program #12: If Else Statement
- Program #13: Else If Statement
- Program #14: Block Structure
- Program #15: Whitespaces
- Program #16: Regular Expressions
- Program #17: Lists
- Program #18: Tuple
- Program #19: Sets
- Program #20: Frozen Sets
- Program #21: Collection Transition
- Program #22: Loop Else
- Program #23: Arguments
- Program #24: Mutable Arguments
- Program #25: Accepting Variable Arguments
- Program #26: Unpacking Argument List
- Program #27: Scope
- Program #28: Error Handling
- Program #29: Namespaces
- Program #30: File Input Output
- Program #31: Higher Order Functions
- Program #32: Anonymous Functions
- Program #33: Nested Functions
- Program #34: Closure
- Program #35: Lexical Scoping
- Program #36: Operator
- Program #37: Decorators
- Program #38: List Comprehensions
- Program #39: Generator Expressions
- Program #40: Generator Functions
- Program #41: Itertools Chain
- Program #42: Itertools Izip
- Program #43: Debugging Tool Print
- Program #44: Classes
- Program #45: Emulation
- Program #46: Class Method
- Program #47: Static Method
- Program #48: Inheritance
- Program #49: Encapsulation
- Program #50: N-Dimensional Array
- Program #51: Reading Writing Data
- Program #52: Reading Data From CSV
- Program #53: Normalizing Data
- Program #54: Formatting Data
- Program #55: Controlling Line Properties Matplotlib
- Program #56: Plotting Simple Function
- Program #57: Importing Module
- Program #58: Creating Module
- Program #59: Graphing Matplotlib Using Defaults
- Program #60: Graphing Matplotlib Using Defaults Changing Colors
- Program #61: Graphing Matplotlib Using Defaults Setting Limits
- Program #62: Graphing Matplotlib Using Defaults Setting Ticks
- Program #63: Graphing Matplotlib Using Defaults Setting Tick Labels
- Program #64: Graphing Matplotlib Using Defaults Moving Spines
- Program #65: Graphing Matplotlib Using Defaults Adding Legends
- Program #66: Graphing Matplotlib Using Defaults Annotating Points Legends
- Program #67: Data Manipulation Using Pandas
- Program #68: Reading Data From Hana To Python
- Program #69: Reading Writing Data
- Program #70: Reading Data From CSV
- Program #71: Normalizing Data
- Program #72: Formatting Data
- Program #73: Controlling Line Properties Matplotlib
- Program #74: Plotting Simple Function
- Program #75: Importing Module
- Unit 2: Big Data Processing
- Program #76: Creating Module
- Program #77: Graphing Matplotlib Using Defaults
- Program #78: Graphing Matplotlib Using Defaults Changing Colors
- Program #79: Graphing Matplotlib Using Defaults Setting Limits
- Program #80: Isotonic Regression Scikit Learn
- Program #81: Neural Networks Scikit Learn
- Program #82: Non Linear Svm Scikit Learn
- Program #83: Decision Trees Scikit Learn
- Program #84: Plotting Validation Curve Scikit Learn
- Program #85: Loading Datasets Scikit Learn
- Unit 3: Machine Learning: Part 1
- Program #86: Mean Shift Clustering Algorithm Scikit Learn
- Program #87: Affinity Propagation Clustering Algorithm Scikit Learn
- Program #88: Dbscan Clustering Algorithm Scikit Learn
- Program #89: Kmeans Clustering Algorithm Scikit Learn
- Program #90: Spectral Bi Clustering Algorithm Scikit Learn
- Program #91: Spectral Co Clustering Algorithm Scikit Learn
- Program #92: Ridge Regression Scikit Learn
- Program #93: Scientific Analysis Arrays Numpy
- Program #94: Scientific Analysis Arrays Reshaping Numpy
- Program #95: Scientific Analysis Arrays Concatenating Numpy
- Program #96: Scientific Analysis Arrays Adding New Dimensions Numpy
- Program #97: Scientific Analysis Arrays Initializing With Zeros Ones Numpy
- Program #98: Scientific Analysis Mgrid Scipy
- Program #99: Scientific Analysis Polynomial Scipy
- Program #100: Scientific Analysis Vectorizing Functions Scipy
- Unit 4: Machine Learning: Part 2
- Program #101: Scientific Analysis Select Function Scipy
- Program #102: Scientific Analysis General Integration Scipy
- Program #103: Time Series Analysis Pandas
- Program #104: Exporting Data Using Pandas
- Program #105: Importing Data Using Pandas
- Program #106: Data Analysis Pandas
- Program #107: Empty Graph Networkx
- Program #108: Graph Adding Nodes Networkx
- Program #109: Graph Adding Edges Networkx
- Program #110: Graph Display Networkx
- Program #111: Graph Path Networkx
- Program #112: Renaming Nodes Networkx
- Program #113: Example Pymc
- Program #114: L1 Based Feature Selection
- Program #115: Line Plot Matplotlib
- Program #116: Dot Plot Matplotlib
- Unit 5: Predictive Analytics
- Program #117: Numeric Plot Matplotlib
- Program #118: Figures Axes Matplotlib
- Program #119: 2D Plotting Matplotlib
- Program #120: 2D Plot Scikit Learn
- Program #121: Classification Scikit Learn
- Program #122: Model Selection Scikit Learn
- Program #123: Nearest Neighbours Regression Scikit Learn
- Program #124: Graphing Matplotlib Regular Plot
- Program #125: Graphing Matplotlib Scatter Plot
- Program #126: Graphing Matplotlib Bar Plot
- Program #127: Graphing Matplotlib Contour Plot
- Program #128: Graphing Matplotlib Imshow
- Program #129: Graphing Matplotlib Pie Chart
- Program #130: Graphing Matplotlib Quiver Plot
- Program #131: Graphing Matplotlib Grids
- Program #132: Graphing Matplotlib Multi Plots
- Unit 6: Advanced Machine Learning
- Program #133: Graphing Matplotlib Polar Axis
- Program #134: Graphing Matplotlib 3D Plot
- Program #135: Graphing Matplotlib Texts
- Program #136: Histogram Matplotlib
- Program #137: Bed Occupancy Optimization
- Program #138: Life Time Value Customer Prediction
- Program #139: Customer Upselling Characteristics Prediction
- Program #140: Sales Lead Prioritization
- Program #141: Inventory Demand Forecasting
- Program #142: Credit Card Fraud Risk
- Program #143: Employee Churn Prediction
- Program #144: Patient Medication Compliance Prediction
- Program #145: Physician Attrition Prediction
- Program #146: Patient Readmittance Rate Prediction
- Unit 7: Data Visualization
- Program #147: Patient Insurance Claim Prediction
- Program #148: Drug Demand Forecasting
- Program #149: Customer Retention Analysis
- Program #150: Hospital Bed Turn Analysis
- Program #151: Patient Survival Analysis
- Program #152: Patient Medication Effectiveness Analysis
- Program #153: Sales Growth Analysis
- Program #154: Customer Cross Selling Analysis
- Program #155: Product Customer Segmentation
- Unit 8: Use Case Implementation: Part 1
- Program #156: Employee Talent Management
- Program #157: Patient Bed Occupancy
- Program #158: Product Market Basket Analysis
- Program #159: Automobile Claims Handling Analysis
- Program #160: Customer Market Share
- Program #161: Data Collection From Excel
- Program #162: Data Collection From CSV
- Program #163: Data Collection From Clipboard
- Program #164: Data Collection From HTML
- Program #165: Data Collection From XML
- Unit 9: Use Case Implementation: Part 2
- Program #166: Data Collection From JSON
- Program #167: Data Collection From PDF
- Program #168: Data Collection From Plain Text
- Program #169: Data Collection From DOCX
- Program #170: Data Collection From HDF
- Program #171: Data Collection From Image
- Program #172: Data Collection From MP3
- Program #173: Data Collection From SAP HANA
- Program #174: Data Collection From Hadoop
- Program #175: Data Integration Concatenate
- Program #176: Data Integration Merge
- Program #177: Data Integration Join
- Program #178: Data Mapping Dictionary Literal Values
- Program #179: Data Mapping Dictionary Operations
- Program #180: Data Mapping Dictionary Comparison Operations
- Program #181: Data Mapping Dictionary Statements
- Program #182: Data Provisioning Extraction
- Program #183: Data Provisioning Transformation
- Unit 10: Advanced Data Processing
- Program #184: Data Provisioning Loading
- Program #185: Iterators
- Program #186: Generator Expressions
- Program #187: Generators
- Program #188: Bidirectional Communication
- Program #189: Chaining Generators
- Program #190: Decorators
- Program #191: Decorators As Functions
- Program #192: Decorators As Classes
- Program #193: Decorators Copying Docstring Other Attributes
- Program #194: Example Standard Library
- Program #195: Deprecation Of Functions
- Program #196: While Loop Removing Decorator
- Program #197: Plugin Registration System
- Program #198: Context Managers
- Program #199: Context Managers Catching Exceptions
- Unit 11: Advanced Programming
- Program #200: Context Managers Defining Using Generators
- Program #201: Ndarray
- Program #202: Ndarray Block Of Memory
- Program #203: Ndarray Data Type
- Program #204: Ndarray Indexing Scheme Strides
- Program #205: Ndarray Slicing With Integers
- Program #206: Ndarray Transposing With Integers
- Program #207: Ndarray Reshaping Integers
- Program #208: Ndarray Broadcasting
- Program #209: Ndarray Universal Function
- Program #210: Ndarray Generalized Universal Function
- Program #211: Ndarray Old Buffer Protocol
- Program #212: Processing Opening Writing To Image
- Program #213: Image Processing Displaying Images
- Program #214: Image Processing Displaying Images Basic Manipulation
- Program #215: Image Processing Geometrical Transformations
- Program #216: Image Processing Filtering Blurring
- Program #217: Image Processing Filtering Sharpening
- Program #218: Image Processing Filtering Denoising
- Program #219: Image Processing Filtering Apply Gaussian Filter
- Program #220: Image Processing Filtering Apply Median Filter
- Program #221: Image Processing Feature Extraction Edge Detection
- Program #222: Image Processing Feature Extraction Segmentation
- Program #223: Tensorflow Hello World
- Program #224: Tensorflow Tensors
- Program #225: Tensorflow Fixed Tensors
- Program #226: Tensorflow Sequence Tensors
- Program #227: Tensorflow Random Tensors
- Program #228: Tensorflow Constants
- Program #229: Tensorflow Variables
- Program #230: Tensorflow Placeholders
- Program #231: Tensorflow Graphs
- Program #232: Tensorflow Session
- Program #233: Tensorflow Feed Dictionary
- Program #234: Tensorflow Data Type
- Program #235: Tensorflow Add Two Constants
- Program #236: Tensorflow Multiply Two Constants
- Program #237: Tensorflow Matrix Inverse Method
- Program #238: Tensorflow Queues
- Program #239: Tensorflow Saving Variables
- Program #240: Tensorflow Restoring Variables
- Program #241: Tensorflow Tensorboard
- Program #242: Tensorflow Namescope
- Program #243: Tensorflow Linear Regression
- Program #244: Tensorflow Logistic Regression
- Program #245: Tensorflow Random Forest
- Program #246: Tensorflow Kmeans Clustering
- Unit 12: Working with Tensorflow
- Program #247: Tensorflow Linear Support Vector Machine
- Program #248: Tensorflow Non Linear Support Vector Machine
- Program #249: Tensorflow Multi Class Support Vector Machine
- Program #250: Tensorflow Nearest Neighbours
- Program #251: Tensorflow Neural Networks
- Program #252: Tensorflow Convolutional Neural Networks
- Program #253: Tensorflow Deep Neural Networks
- Program #254: NLP Installing Nltk
- Program #255: NLP Count Word Frequency Nltk
- Program #256: NLP Remove Stop Words
- Program #257: NLP Tokenize Text Nltk
- Program #258: NLP Tokenize Non English Text Nltk
- Program #259: NLP Get Synonyms Nltk
- Program #260: NLP Get Antonyms Nltk
- Unit 13: Working with NLP
- Program #261: NLP Word Stemming Nltk
- Program #262: NLP Non English Word Stemming Nltk
- Program #263: NLP Lemmatizing Words Nltk
- Program #264: NLP Part Of Speech Tagging Nltk
- Program #265: NLP Chinking Nltk
- Program #266: NLP Chunking Nltk
- Program #267: NLP Corpora Nltk
- Program #268: NLP Named Entity Recognition Nltk
- Program #269: NLP Text Classification Nltk
- Unit 14: Working with Computer Vision
- Program #270: NLP Converting Words To Features Nltk
- Program #271: NLP Naive Bayes Classifier Nltk
- Program #272: NLP Save Classifier Nltk
- Program #273: NLP Scikit Learn Algorithms Nltk
- Program #274: NLP Combining Algorithms Nltk
- Program #275: NLP Noise Removal Nltk
- Program #276: NLP Noise Removal Regular Expressions Nltk
- Program #277: NLP Object Standardization Nltk
- Program #278: NLP Topic Modelling Nltk
- Program #279: NLP Ngrams Nltk
- Program #280: NLP Tfidf Vectorizer Nltk
- Program #281: NLP Word Embedding Nltk
- Program #282: NLP Text Matching Levenshtein Distance Nltk
- Program #283: NLP Cosine Similarity Nltk
- Program #284: NLP Wordnet Nltk
- Unit 15: Working with RPA
- Program #285: Computer Vision Install Opencv
- Program #286: Computer Vision Reading Images Opencv
- Program #287: Computer Vision Displaying Images Opencv
- Program #288: Computer Vision Writing Images Opencv
- Program #289: Computer Vision Color Space Opencv
- Program #290: Computer Vision Thresholding Opencv
- Program #291: Computer Vision Finding Contours Opencv
- Program #292: Computer Vision Image Scaling Opencv
- Program #293: Computer Vision Image Rotation Opencv
- Program #294: Computer Vision Image Translation Opencv
- Program #295: Computer Vision Image Edge Detection Opencv
- Program #296: Computer Vision Image Filtering Opencv
- Program #297: Computer Vision Image Filtering Blurring Opencv
- Program #298: Computer Vision Image Filtering Blurring Gaussian Blur Opencv
- Unit 16: Working with Deep Learning
- Program #299: Computer Vision Image Filtering Blurring Median Blur Opencv
- Program #300: Computer Vision Image Filtering Bilateral Opencv
- Program #301: Computer Vision Morphological Operations Erosion Opencv
- Program #302: Computer Vision Morphological Operations Dilation Opencv
- Program #303: Computer Vision Morphological Operations Opening Opencv

/*****************************

File Name : CSLAB_HELLO_WORLD_V1

Purpose : A Program for Hello World in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 09:04 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Hello World in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object HelloWorld {
  def main(args: Array[String]): Unit = {
    println("Hello, world!")
  }
}

/*****************************

Disclaimer:

We are providing this code block strictly for learning and research. It is not production-ready code. We assume no liability for this code under any circumstances. By using this code, users assume full risk.

All software, hardware, and other products referenced in these materials belong to the respective vendors who developed or own them.

/*****************************

File Name : CSLAB_VARIABLES_V1

Purpose : A Program for Variables in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 9:15 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Variables in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

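// var declares a mutable variable that can be reassigned later
var vAR_CSLAB_myVariable : Int = 0
// val declares an immutable value that cannot be reassigned
val vAR_CSLAB_myValue : Int = 1
// In the REPL or a notebook, evaluating a name echoes its value
vAR_CSLAB_myValue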
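As a minimal sketch of the `var`/`val` difference, the hypothetical object `VarValDemo` below reassigns a `var` and lets the compiler infer the type of a `val`. The reassignment of the `val` is left commented out because it would not compile.

```scala
// Hypothetical demo object; the names are illustrative only
object VarValDemo {
  // A var can be reassigned after it is declared
  var counter: Int = 0
  counter = counter + 5

  // A val is bound exactly once; uncommenting the next line is a compile error
  val limit: Int = 10
  // limit = 20

  // The type annotation is optional: the compiler infers Int here
  val inferred = counter + limit
}
```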

/*****************************

File Name : CSLAB_VARIABLE_MULTIPLE_ASSIGNMENTS_V1

Purpose : A Program for Variables in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 9:28 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Variables in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

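// The types of both declarations are inferred (Int and String)
var vAR_CSLAB_myVariable = 10
val vAR_CSLAB_myValue = "Hello, Scala!"
// Destructure a tuple to bind two typed values in a single statement
val (vAR_CSLAB_myVariable1: Int, vAR_CSLAB_myVariable2: String) = (40, "Foo")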
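The multiple assignment above works by matching a tuple pattern on the left-hand side. A small sketch with the hypothetical object `MultipleAssignmentDemo` shows the same destructuring, positional access through `_1`/`_2`, and the `val x, y = ...` form that binds several names to the same expression.

```scala
// Hypothetical demo object; the names are illustrative only
object MultipleAssignmentDemo {
  // A tuple literal groups values of different types into one value
  val pair: (Int, String) = (40, "Foo")

  // A tuple pattern on the left binds each component to its own name
  val (count, label) = pair

  // Components are also reachable positionally through _1 and _2
  val first: Int = pair._1
  val second: String = pair._2

  // One declaration can bind several names to the same expression
  val x, y = 7
}
```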

/*****************************

File Name : CSLAB_DATATYPE_INTEGER_V1

Purpose : A Program for Integer Datatype in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 9:37 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Integer Datatype in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

var vAR_CSLAB_a : Int = 12
vAR_CSLAB_a + 30
val vAR_CSLAB_b : Int = 50
vAR_CSLAB_b + 30
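Two properties of `Int` are worth seeing alongside the addition above: integer division truncates toward zero, and `Int` is a 32-bit signed type that wraps around on overflow. The hypothetical object below is a small sketch of both, with widening to `Long` as the usual way to keep the exact result.

```scala
// Hypothetical demo object; the names are illustrative only
object IntegerDemo {
  // Int division truncates toward zero; % gives the remainder
  val quotient: Int = 7 / 2
  val negativeQuotient: Int = -7 / 2
  val remainder: Int = 7 % 2
  // Exceeding Int.MaxValue wraps around silently to Int.MinValue
  val wrapped: Int = Int.MaxValue + 1
  // Widening to Long (64-bit) before adding keeps the exact result
  val widened: Long = Int.MaxValue.toLong + 1
}
```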

/*****************************

File Name : CSLAB_DATATYPE_FLOAT_V1

Purpose : A Program for Float Datatype in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 9:42 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Float Datatype in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

// The f suffix makes this a 32-bit Float literal
var vAR_CSLAB_c = 12.3f

/*****************************

File Name : CSLAB_DATATYPE_DOUBLE_V1

Purpose : A Program for Double Datatype in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 9:47 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Double Datatype in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

// A decimal literal without a suffix is a 64-bit Double
var vAR_CSLAB_c = 12.3
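The Float and Double programs differ only in the literal suffix, but the precision difference is observable. The hypothetical object below sketches it: the same decimal stored as a `Float` does not widen back to the `Double` value, and even `Double` cannot represent `0.1 + 0.2` as exactly `0.3`, so a tolerance comparison is the usual approach.

```scala
// Hypothetical demo object; the names are illustrative only
object FloatingPointDemo {
  // An f suffix makes a 32-bit Float; an unsuffixed decimal is a 64-bit Double
  val singlePrecision: Float = 12.3f
  val doublePrecision: Double = 12.3
  // Widening the Float exposes the precision lost when 12.3 was rounded to 32 bits
  val sameValue: Boolean = singlePrecision.toDouble == doublePrecision
  // 0.1 and 0.2 have no exact binary representation, so the sum is not exactly 0.3
  val sum: Double = 0.1 + 0.2
  val exactlyEqual: Boolean = sum == 0.3
  // Comparing against a small tolerance avoids the rounding mismatch
  val approximatelyEqual: Boolean = math.abs(sum - 0.3) < 1e-9
}
```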

/*****************************

File Name : CSLAB_ACCESS_MODIFIER_PRIVATE_MEMBER_V1

Purpose : A Program for Access Modifier - Private Members in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 9:54 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Access Modifier - Private Members in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

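class Example {
  // private: accessible only inside Example (and its companion object)
  private var vAR_CSLAB_a: Int = 7

  def show(): Unit = {
    vAR_CSLAB_a = 8
    println(vAR_CSLAB_a)
  }
}

object access extends App {
  val vAR_CSLAB_e = new Example()
  vAR_CSLAB_e.show()
  // Uncommenting these lines fails to compile: vAR_CSLAB_a is private to Example
  // vAR_CSLAB_e.vAR_CSLAB_a = 8
  // println(vAR_CSLAB_e.vAR_CSLAB_a)
}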
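Scala's `private` is slightly wider than "this class only": a companion object (an `object` with the same name as the class, defined in the same file) can also read private members. The `Counter` class and companion below are hypothetical, a minimal sketch of that rule rather than part of the lab program.

```scala
// Hypothetical class and companion, used only to illustrate private access
class Counter(start: Int) {
  // private: visible inside Counter and inside its companion object
  private var value: Int = start
  def increment(): Unit = value += 1
}

object Counter {
  // The companion object may read the private field of any Counter instance
  def peek(c: Counter): Int = c.value
}
```

Code outside `Counter` and its companion still cannot write `c.value`, exactly as in the `access` object above.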

/*****************************

File Name : CSLAB_ACCESS_MODIFIER_PROTECTED_MEMBER_V1

Purpose : A Program for Access Modifier - Protected Members in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 10:09 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Access Modifier - Protected Members in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

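class Example1 {
  // protected: accessible inside Example1 and its subclasses only
  protected var vAR_CSLAB_a: Int = 7

  def show(): Unit = {
    vAR_CSLAB_a = 8
    println(vAR_CSLAB_a)
  }
}

class Example2 extends Example1 {
  // A subclass can read and write the inherited protected member
  def show1(): Unit = {
    vAR_CSLAB_a = 9
    println(vAR_CSLAB_a)
  }
}

object access1 extends App {
  val vAR_CSLAB_e = new Example2()
  vAR_CSLAB_e.show()
  vAR_CSLAB_e.show1()
  val vAR_CSLAB_e2 = new Example1()
  vAR_CSLAB_e2.show()
  // Uncommenting these lines fails to compile: protected members are not visible from outside
  // vAR_CSLAB_e.vAR_CSLAB_a = 10
  // println(vAR_CSLAB_e.vAR_CSLAB_a)
}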

/*****************************

File Name : CSLAB_ACCESS_MODIFIER_PUBLIC_MEMBER_V1

Purpose : A Program for Access Modifier - Public Members in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 10:28 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Access Modifier - Public Members in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

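class Example3 {
  // No access modifier means public: the member is accessible from anywhere
  var vAR_CSLAB_a: Int = 7

  def show(): Unit = {
    println(vAR_CSLAB_a)
  }
}

class Example4 extends Example3 {
  def show1(): Unit = {
    vAR_CSLAB_a = 9
    println(vAR_CSLAB_a)
  }
}

object access2 extends App {
  val vAR_CSLAB_e = new Example4()
  vAR_CSLAB_e.show()
  vAR_CSLAB_e.show1()
  val vAR_CSLAB_e1 = new Example3()
  // vAR_CSLAB_e1.show1() would not compile: show1 is defined only on Example4
  // A public member can be written and read from outside the class
  vAR_CSLAB_e1.vAR_CSLAB_a = 10
  println(vAR_CSLAB_e1.vAR_CSLAB_a)
  vAR_CSLAB_e1.show()
}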

/*****************************

File Name : CSLAB_STRING_INTERPOLATION_V1

Purpose : A Program for String Interpolation in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 10:39 hrs

Version : 1.0

/*****************************

## Program Description : A Program for String Interpolation in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

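object HelloWorld {
  def main(args: Array[String]): Unit = {
    val vAR_CSLAB_name = "mark"
    val vAR_CSLAB_age = 18
    // Plain string concatenation
    println(vAR_CSLAB_name + " is " + vAR_CSLAB_age + " years old")
    println()
    // s-interpolator: substitutes each $name reference into the string
    println(s"$vAR_CSLAB_name is $vAR_CSLAB_age years old")
    // f-interpolator: like s, but each reference can carry a printf-style format (%s, %d)
    println(f"$vAR_CSLAB_name%s is $vAR_CSLAB_age%d years old")
  }
}

HelloWorld.main(Array(" "))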
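Beyond bare `$name` references, interpolators accept arbitrary expressions inside `${...}`, `f` accepts width and padding flags, and the standard `raw` interpolator leaves backslash escapes unprocessed. The object below is a hypothetical sketch of these three cases.

```scala
// Hypothetical demo object; the names are illustrative only
object InterpolationDemo {
  val name = "mark"
  val age = 18
  // ${...} embeds any expression, not just a bare identifier
  val nextYear: String = s"$name will be ${age + 1} next year"
  // %04d pads the integer to four digits with leading zeros
  val padded: String = f"$age%04d"
  // raw substitutes like s but does not interpret escapes such as \n or \t
  val path: String = raw"C:\new\table"
}
```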

/*****************************

File Name : CSLAB_S_STRING_INTERPOLATION_V1

Purpose : A Program for "s" String Interpolation in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 10:44 hrs

Version : 1.0

/*****************************

## Program Description : A Program for "s" String Interpolation in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object HelloWorld {
  def main(args: Array[String]): Unit = {
    val vAR_CSLAB_name = "mark"
    val vAR_CSLAB_age = 18
    // Plain string concatenation
    println(vAR_CSLAB_name + " is " + vAR_CSLAB_age + " years old")
    println()
    // s-interpolator: substitutes each $name reference into the string
    println(s"$vAR_CSLAB_name is $vAR_CSLAB_age years old")
  }
}

HelloWorld.main(Array(" "))

/*****************************

File Name : CSLAB_F_STRING_INTERPOLATION_V1

Purpose : A Program for "f" String Interpolation in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 10:49 hrs

Version : 1.0

/*****************************

## Program Description : A Program for "f" String Interpolation in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object HelloWorld {
  def main(args: Array[String]): Unit = {
    val vAR_CSLAB_name = "mark"
    val vAR_CSLAB_age = 18
    // Plain string concatenation
    println(vAR_CSLAB_name + " is " + vAR_CSLAB_age + " years old")
    println()
    // f-interpolator: %s and %d are type-checked against the String and Int values
    println(f"$vAR_CSLAB_name%s is $vAR_CSLAB_age%d years old")
  }
}

HelloWorld.main(Array(" "))

/*****************************

File Name : CSLAB_IF_STATEMENT_V1

Purpose : A Program for if Statements in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 11:04 hrs

Version : 1.0

/*****************************

## Program Description : A Program for if Statements in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object IF_STATEMENT {
  def main(args: Array[String]): Unit = {
    val vAR_CSLAB_x = 10
    if (vAR_CSLAB_x < 20) {
      println("This is if statement")
    }
  }
}

IF_STATEMENT.main(Array(" "))

/*****************************

File Name : CSLAB_IF_ELSE_STATEMENT_V1

Purpose : A Program for if-else Statements in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 11:10 hrs

Version : 1.0

/*****************************

## Program Description : A Program for if-else Statements in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object IF_ELSE_STATEMENT {
  def main(args: Array[String]): Unit = {
    val vAR_CSLAB_x = 30
    if (vAR_CSLAB_x < 20) {
      println("This is if statement")
    } else {
      println("This is else statement")
    }
  }
}

IF_ELSE_STATEMENT.main(Array(" "))
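Unlike in Java or C, `if`/`else` in Scala is an expression: the branch that runs produces the value of the whole `if`. The hypothetical object below is a short sketch that returns the two messages instead of printing them, so the result can be bound to a `val`.

```scala
// Hypothetical demo object; the names are illustrative only
object IfElseExpressionDemo {
  // The value of the chosen branch is the value of the whole if/else
  def label(x: Int): String =
    if (x < 20) "This is if statement" else "This is else statement"

  // Because it yields a value, the result can be bound directly to a val
  val result: String = label(30)
}
```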

/*****************************

File Name : CSLAB_ELSE_STATEMENT_V1

Purpose : A Program for else-if Statements in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 11:24 hrs

Version : 1.0

/*****************************

## Program Description : A Program for else-if Statements in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object ELSE_STATEMENT {
  def main(args: Array[String]): Unit = {
    val vAR_CSLAB_x = 30
    // Conditions are tested top to bottom; the first true branch runs
    if (vAR_CSLAB_x == 10) {
      println("Value of vAR_CSLAB_x is 10")
    } else if (vAR_CSLAB_x == 20) {
      println("Value of vAR_CSLAB_x is 20")
    } else if (vAR_CSLAB_x == 30) {
      println("Value of vAR_CSLAB_x is 30")
    } else {
      println("This is else statement")
    }
  }
}

ELSE_STATEMENT.main(Array(" "))
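When every branch compares the same value against a constant, a `match` expression is a common alternative to an else-if chain. The sketch below (hypothetical object name) produces the same messages; the wildcard case `_` plays the role of the final `else`.

```scala
// Hypothetical demo object; the names are illustrative only
object ElseIfAsMatchDemo {
  // Cases are tried top to bottom, like the else-if conditions
  def describe(x: Int): String = x match {
    case 10 => "Value of x is 10"
    case 20 => "Value of x is 20"
    case 30 => "Value of x is 30"
    case _  => "This is else statement"
  }
}
```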

/*****************************

File Name : CSLAB_NESTED_IF_STATEMENT_V1

Purpose : A Program for Nested if Statements in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 11:33 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Nested if Statements in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object NESTED_IF_STATEMENT {
  def main(args: Array[String]): Unit = {
    val vAR_CSLAB_x = 30
    val vAR_CSLAB_y = 10
    if (vAR_CSLAB_x == 30) {
      if (vAR_CSLAB_y == 10) {
        println("vAR_CSLAB_x = 30 and vAR_CSLAB_y = 10")
      }
    }
  }
}

NESTED_IF_STATEMENT.main(Array(" "))

/*****************************

File Name : CSLAB_BREAK_STATEMENT_V1

Purpose : A Program for Break Statement in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 11:45 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Break Statement in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

import scala.util.control._

object BREAK_STATEMENT {

def main(args: Array[String]): Unit = {

val numList = List(1,2,3,4,5,6,7,8,9,10);

val loop = new Breaks;

loop.breakable {

for( a <- numList){

println( "Value of a: " + a );

if( a == 4 ){

loop.break();

}

}

}

println( "After the loop" );

}

}

BREAK_STATEMENT.main(Array(" "))
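`Breaks` stops the loop by throwing and catching a control exception. For that reason, Scala code often expresses an early stop with a collection method instead. The sketch below shows both approaches; the names are illustrative. The first imports `breakable` and `break` directly from the `Breaks` object. The second uses `span`, which splits a list at the first element that fails a predicate:

```scala
import scala.util.control.Breaks.{break, breakable}
import scala.collection.mutable.ListBuffer

object BreakSketch {
  // Imperative version: record each value and stop after the first one equal to stopAt
  def withBreak(xs: List[Int], stopAt: Int): List[Int] = {
    val seen = ListBuffer.empty[Int]
    breakable {
      for (x <- xs) {
        seen += x
        if (x == stopAt) break()
      }
    }
    seen.toList
  }

  // Functional version: keep the prefix before stopAt, plus stopAt itself if present
  def withSpan(xs: List[Int], stopAt: Int): List[Int] = {
    val (before, rest) = xs.span(_ != stopAt)
    before ++ rest.take(1)
  }
}

println(BreakSketch.withSpan((1 to 10).toList, 4))
```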

/*****************************

File Name : CSLAB_BREAKING_NESTED_LOOPS_V1

Purpose : A Program for Breaking Nested Loops in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 12:01 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Breaking Nested Loops in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

import scala.util.control._

object BREAKING_NESTED_LOOP {

def main(args: Array[String]): Unit = {

val vAR_CSLAB_numList1 = List(1,2,3,4,5);

val vAR_CSLAB_numList2 = List(11,12,13);

val vAR_CSLAB_outer = new Breaks;

val vAR_CSLAB_inner = new Breaks;

vAR_CSLAB_outer.breakable {

for(vAR_CSLAB_a <- vAR_CSLAB_numList1){

println( "Value of vAR_CSLAB_a: " + vAR_CSLAB_a );

vAR_CSLAB_inner.breakable {

for(vAR_CSLAB_b <- vAR_CSLAB_numList2){

println( "Value of vAR_CSLAB_b: " + vAR_CSLAB_b );

if( vAR_CSLAB_b == 12 ){

vAR_CSLAB_inner.break();

}

}

} // inner breakable

}

} // outer breakable.

}

}

BREAKING_NESTED_LOOP.main(Array(" "))
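In the program above, `vAR_CSLAB_inner.break` leaves only the inner loop, so the outer loop still visits every element of the first list. To stop both loops from inside the inner one, call `break` on the outer `Breaks` instance. This is a minimal sketch with illustrative names; it records the visited pairs instead of printing them:

```scala
import scala.util.control.Breaks
import scala.collection.mutable.ListBuffer

object NestedBreakSketch {
  def visit(as: List[Int], bs: List[Int], stopAt: Int): List[(Int, Int)] = {
    val outer = new Breaks
    val visited = ListBuffer.empty[(Int, Int)]
    outer.breakable {
      for (a <- as) {
        for (b <- bs) {
          visited += ((a, b))
          // Breaking the outer instance exits both loops at once
          if (b == stopAt) outer.break()
        }
      }
    }
    visited.toList
  }
}

println(NestedBreakSketch.visit(List(1, 2, 3, 4, 5), List(11, 12, 13), 12))
```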

/*****************************

File Name : CSLAB_WHILE_LOOP_V1

Purpose : A Program for While Loop in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 12:14 hrs

Version : 1.0

/*****************************

## Program Description : A Program for While Loop in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object WHILE_LOOP {

def main(args: Array[String]): Unit = {

// Local variable declaration:

var vAR_CSLAB_a = 10;

// while loop execution

while( vAR_CSLAB_a < 20 ){

println( "Value of vAR_CSLAB_a: " + vAR_CSLAB_a );

vAR_CSLAB_a = vAR_CSLAB_a + 1;

}

}

}

WHILE_LOOP.main(Array(" "))

/*****************************

File Name : CSLAB_DO_WHILE_LOOP_V1

Purpose : A Program for Do While Loop in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 12:28 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Do While Loop in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object DO_WHILE_LOOP {

def main(args: Array[String]): Unit = {

// Local variable declaration:

var vAR_CSLAB_a = 10;

// do loop execution

do {

println( "Value of vAR_CSLAB_a: " + vAR_CSLAB_a );

vAR_CSLAB_a = vAR_CSLAB_a + 1;

} while( vAR_CSLAB_a < 20 ) // do-while is Scala 2 syntax; Scala 3 removed it

}

}

DO_WHILE_LOOP.main(Array(" "))
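A `do`/`while` loop always runs its body at least once, because the condition is checked after the body. Scala 3 removed the `do`/`while` syntax. One form that compiles in both Scala 2.13 and Scala 3 places the body and the condition inside the `while` condition block. This sketch uses illustrative names and collects the values instead of printing them:

```scala
import scala.collection.mutable.ListBuffer

object DoWhileSketch {
  def valuesDoWhile(start: Int, end: Int): List[Int] = {
    val out = ListBuffer.empty[Int]
    var a = start
    // The block runs the body and then yields the condition; the loop body itself is empty
    while ({
      out += a
      a += 1
      a < end
    }) ()
    out.toList
  }
}

println(DoWhileSketch.valuesDoWhile(10, 20))
println(DoWhileSketch.valuesDoWhile(25, 20)) // body still runs once
```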

/*****************************

File Name : CSLAB_FOR_LOOP_V1

Purpose : A Program for FOR Loop in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 12:41 hrs

Version : 1.0

/*****************************

## Program Description : A Program for FOR Loop in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object FOR_LOOP {

def main(args: Array[String]): Unit = {

// for loop execution with a range

for( vAR_CSLAB_a <- 1 to 10){

println( "Value of vAR_CSLAB_a: " + vAR_CSLAB_a );

}

}

}

FOR_LOOP.main(Array(" "))
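`1 to 10` is an inclusive `Range`. `until` excludes the end value, `by` sets the step, and an `if` guard filters values. `yield` turns the loop into an expression that builds a collection. The names in this short sketch are illustrative:

```scala
object ForSketch {
  val inclusive = (for (a <- 1 to 10) yield a).toList          // 1 through 10
  val exclusive = (for (a <- 1 until 10) yield a).toList       // 1 through 9
  val evens     = (for (a <- 1 to 10 if a % 2 == 0) yield a).toList
  val stepped   = (1 to 10 by 3).toList                        // 1, 4, 7, 10
}

println(ForSketch.evens)
```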

/*****************************

File Name : CSLAB_ADD_INTEGERS_V1

Purpose : A Program for Adding Integers in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 12:50 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Adding Integers in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object add{

def FUNCTION_ADD_INTERGERS ( vAR_CSLAB_a:Int, vAR_CSLAB_b:Int ) : Int = {

var vAR_CSLAB_sum:Int = 0

vAR_CSLAB_sum = vAR_CSLAB_a + vAR_CSLAB_b

return vAR_CSLAB_sum

}

}

add.FUNCTION_ADD_INTERGERS(5, 6)
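In Scala, the value of the last expression in a function body is the function's result. The temporary `var` and the explicit `return` are therefore optional. This sketch shows a more idiomatic version of the same addition; the name `AddSketch.addIntegers` is illustrative:

```scala
object AddSketch {
  // Single-expression body: the sum is returned directly
  def addIntegers(a: Int, b: Int): Int = a + b
}

println(AddSketch.addIntegers(5, 6))
```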

/*****************************

File Name : CSLAB_CALLING_FUNCTIONS_V1

Purpose : A Program for Calling Functions in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 13:42 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Calling Functions in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object add{

def FUNCTION_ADD_INTERGERS ( vAR_CSLAB_a:Int, vAR_CSLAB_b:Int ) : Int = {

var vAR_CSLAB_sum:Int = 0

vAR_CSLAB_sum = vAR_CSLAB_a + vAR_CSLAB_b

return vAR_CSLAB_sum

}

}

add.FUNCTION_ADD_INTERGERS(5,6)
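A method can be called in more than one way. Besides a plain positional call, you can pass named arguments in any order, or convert the method into a function value and call it through that value. A sketch with illustrative names:

```scala
object CallSketch {
  def addIntegers(a: Int, b: Int): Int = a + b

  val positional = addIntegers(5, 6)
  // Named arguments may appear in any order
  val named = addIntegers(b = 6, a = 5)
  // The expected function type converts the method into a function value (eta-expansion)
  val asFunction: (Int, Int) => Int = addIntegers
  val viaFunction = asFunction(5, 6)
}

println(CallSketch.named)
```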

/*****************************

File Name : CSLAB_FUNCTIONS_CALL_BY_NAME_V1

Purpose : A Program for Functions Call by Name in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 14:01 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Functions Call by Name in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def FUNCTION_CALL_BY_NAME (args: Array[String]): Unit = {

delayed(time());

}

def time() = {

println("Getting time in nanoseconds")

System.nanoTime

}

def delayed( t: => Long ) = {

println("In delayed method")

println("Param: " + t)

}

}

Demo.FUNCTION_CALL_BY_NAME(Array(" "))
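A by-name parameter (`t: => Long`) is evaluated each time it is used inside the method, not once before the call. That is why `time()` prints its message from inside `delayed`. The sketch below uses illustrative names and counts evaluations to make the difference visible:

```scala
object ByNameSketch {
  var evaluations = 0

  // Side-effecting argument: returns 1, 2, 3, ... on successive calls
  def next(): Int = { evaluations += 1; evaluations }

  // By-value: the argument is evaluated once, before the method body runs
  def twiceByValue(x: Int): Int = x + x

  // By-name: the argument expression is re-evaluated at every use of x
  def twiceByName(x: => Int): Int = x + x
}
```

Start from zero evaluations. `twiceByValue(next())` evaluates its argument once and returns 2. A following `twiceByName(next())` evaluates `next()` twice and returns 2 + 3 = 5.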

/*****************************

File Name : CSLAB_FUNCTIONS_WITH_VARIABLE_ARGUMENTS_V1

Purpose : A Program for Functions with Variable Arguments in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 14:17 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Functions with Variable Arguments in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def FUNCTIONS_VARIABLE_ARGUMENTS (args: Array[String]): Unit = {

printStrings("Hello", "Scala", "Python");

}

def printStrings( args:String* ) = {

var vAR_CSLAB_i : Int = 0;

for( arg <- args ){

println("Arg value[" + vAR_CSLAB_i + "] = " + arg );

vAR_CSLAB_i = vAR_CSLAB_i + 1;

}

}

}

Demo.FUNCTIONS_VARIABLE_ARGUMENTS(Array(" "))
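Inside the method, a repeated parameter such as `args: String*` behaves like a `Seq[String]`, so `zipWithIndex` can replace the manual counter. To pass an existing sequence to a repeated parameter, use the `: _*` ascription. A sketch with illustrative names:

```scala
object VarargsSketch {
  // Builds the same "Arg value[i] = ..." lines as printStrings, using zipWithIndex
  def describe(args: String*): Seq[String] =
    args.zipWithIndex.map { case (arg, i) => "Arg value[" + i + "] = " + arg }

  val words = List("Hello", "Scala", "Python")
  // `: _*` expands the list into separate arguments
  val fromList = describe(words: _*)
}

VarargsSketch.fromList.foreach(println)
```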

/*****************************

File Name : CSLAB_DEFAULT_PARAMETER_VALUE_FOR_A_FUNCTION_V1

Purpose : A Program for Default Parameter Value for a Function in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 14:32 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Default Parameter Value for a Function in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def main(args: Array[String]): Unit = {

println( "Returned Value : " + FUNCTION_ADD_INTERGERS() );

}

def FUNCTION_ADD_INTERGERS ( vAR_CSLAB_a:Int = 5, vAR_CSLAB_b:Int = 7 ) : Int = {

var vAR_CSLAB_sum:Int = 0

vAR_CSLAB_sum = vAR_CSLAB_a + vAR_CSLAB_b

return vAR_CSLAB_sum

}

}

Demo.main(Array(" "))
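A default value applies to any parameter the caller leaves out. Named arguments let the caller skip an earlier parameter while still supplying a later one. This sketch reuses the defaults from the program above; the object name is illustrative:

```scala
object DefaultsSketch {
  def addIntegers(a: Int = 5, b: Int = 7): Int = a + b
}

println(DefaultsSketch.addIntegers())      // both defaults: 5 + 7
println(DefaultsSketch.addIntegers(1))     // b keeps its default: 1 + 7
println(DefaultsSketch.addIntegers(b = 1)) // a keeps its default: 5 + 1
```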

/*****************************

File Name : CSLAB_NESTED_FUNCTIONS_V1

Purpose : A Program for Nested Functions in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 14:48 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Nested Functions in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def main(args: Array[String]): Unit = {

println( FUNCTION_FACTORIAL(0) )

println( FUNCTION_FACTORIAL(1) )

println( FUNCTION_FACTORIAL(2) )

println( FUNCTION_FACTORIAL(3) )

}

def FUNCTION_FACTORIAL (vAR_CSLAB_i: Int): Int = {

def fact(vAR_CSLAB_i: Int, vAR_CSLAB_accumulator: Int): Int = {

if (vAR_CSLAB_i <= 1)

vAR_CSLAB_accumulator

else

fact(vAR_CSLAB_i - 1, vAR_CSLAB_i * vAR_CSLAB_accumulator)

}

fact(vAR_CSLAB_i, 1)

}

}

Demo.main(Array(" "))
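The nested `fact` helper is tail-recursive: the recursive call is its last operation, and the accumulator carries the partial product. Annotating the helper with `@tailrec` asks the compiler to verify this. The `Int` accumulator above also overflows for inputs above 12, because 13! exceeds `Int.MaxValue`. The sketch below therefore uses `BigInt`; the names are illustrative:

```scala
import scala.annotation.tailrec

object TailrecSketch {
  def factorial(n: Int): BigInt = {
    // Compilation fails if loop's recursive call is not in tail position
    @tailrec
    def loop(i: Int, acc: BigInt): BigInt =
      if (i <= 1) acc else loop(i - 1, acc * i)
    loop(n, BigInt(1))
  }
}

println(TailrecSketch.factorial(20))
```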

/*****************************

File Name : CSLAB_PARTIALLY_APPLIED_FUNCTIONS_V1

Purpose : A Program for Partially Applied Functions in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 15:03 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Partially Applied Functions in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

import java.util.Date

object Demo {

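def PARTIAL_FUNCTIONS (args: Array[String]): Unit = {

val vAR_CSLAB_date = new Date

// Partially apply log: fix the date argument and leave the message open with `_`

val vAR_CSLAB_logWithDateBound = log(vAR_CSLAB_date, _ : String)

vAR_CSLAB_logWithDateBound("message1")

Thread.sleep(1000)

vAR_CSLAB_logWithDateBound("message2")

Thread.sleep(1000)

vAR_CSLAB_logWithDateBound("message3")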

}

def log(vAR_CSLAB_date: Date, vAR_CSLAB_message: String) = {

println(s"${vAR_CSLAB_date}----$vAR_CSLAB_message")

}

}

Demo.PARTIAL_FUNCTIONS(Array(" "))
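Partial application fixes some arguments of a method and leaves the rest as parameters of a new function. The open parameter is written as an `_` placeholder with its type. Either argument can be the one left open. This sketch uses a hypothetical `render` method that returns the string instead of printing it:

```scala
object PartialSketch {
  def render(tag: String, message: String): String = tag + "----" + message

  // Fix the first argument; the result has type String => String
  val appLog: String => String = render("app", _: String)

  // Fix the second argument instead
  val startedFor: String => String = render(_: String, "started")
}

println(PartialSketch.appLog("message1"))
```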

/*****************************

File Name : CSLAB_RECURSION_FUNCTION_V1

Purpose : A Program for Recursion Function in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 15:39 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Recursion Function in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def RECURSION_FUNCTION (args: Array[String]): Unit = {

for (vAR_CSLAB_i <- 1 to 10)

println( "Factorial of " + vAR_CSLAB_i + ": = " + factorial(vAR_CSLAB_i) )

}

def factorial(vAR_CSLAB_n: BigInt): BigInt = {

if (vAR_CSLAB_n <= 1)

1

else

vAR_CSLAB_n * factorial(vAR_CSLAB_n - 1)

}

}

Demo.RECURSION_FUNCTION(Array(" "))

/*****************************

File Name : CSLAB_HIGHER_ORDER_FUNCTIONS_V1

Purpose : A Program for Higher Order Functions in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 15:53 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Higher Order Functions in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def HIGHER_ORDER_FUNCTION (args: Array[String]): Unit = {

println( apply( layout, 10) )

}

def apply(f: Int => String, v: Int) = f(v)

def layout[A](x: A) = "[" + x.toString() + "]"

}

Demo.HIGHER_ORDER_FUNCTION(Array(" "))
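`apply` is a higher-order function because it takes another function, `f`, as a parameter. Any `Int => String` function can be passed to it, including an anonymous one. Standard collection methods such as `map` follow the same pattern. In this sketch the method is named `applyTo`, to avoid confusion with the special meaning of `apply` on objects; all names are illustrative:

```scala
object HigherOrderSketch {
  def applyTo(f: Int => String, v: Int): String = f(v)
  def layout[A](x: A): String = "[" + x.toString + "]"

  val withMethod = applyTo(layout, 10)              // method passed as a function
  val withLambda = applyTo(v => "<" + v + ">", 10)  // anonymous function
  val mapped = List(1, 2, 3).map(x => layout(x))    // map is a standard higher-order method
}

println(HigherOrderSketch.mapped)
```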

/*****************************

File Name : CSLAB_ANONYMOUS_FUNCTIONS_V1

Purpose : A Program for Anonymous Functions in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 16:07 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Anonymous Functions in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

val inc = (vAR_CSLAB_x:Int) => vAR_CSLAB_x+1

val vAR_CSLAB_x = inc(7)

println(vAR_CSLAB_x)

val mul = (vAR_CSLAB_x: Int, vAR_CSLAB_y: Int) => vAR_CSLAB_x*vAR_CSLAB_y

println(mul(3, 4))

val userDir = () => { System.getProperty("user.dir") }

// Call the function with () to print the directory; println(userDir) would print the function object itself

println( userDir() )
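Function values can also carry explicit function types. Short single-use lambdas can use the `_` placeholder syntax, where each `_` stands for the next parameter in order. A sketch with illustrative names:

```scala
object LambdaSketch {
  val inc: Int => Int = x => x + 1
  val incShort: Int => Int = _ + 1        // same function, placeholder syntax
  val mul: (Int, Int) => Int = _ * _      // first _ is the first parameter, second _ the second
  val userDir: () => String = () => System.getProperty("user.dir")
}

println(LambdaSketch.mul(3, 4))
println(LambdaSketch.userDir())           // the () call runs the function
```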

/*****************************

File Name : CSLAB_CURRYING_FUNCTIONS_V1

Purpose : A Program for Currying Functions in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 16:23 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Currying Functions in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def CURRYING_FUNCTIONS (args: Array[String]): Unit = {

val vAR_CSLAB_str1:String = "Hello, "

val vAR_CSLAB_str2:String = "Scala!"

println( "vAR_CSLAB_str1 + vAR_CSLAB_str2 = " + strcat(vAR_CSLAB_str1)(vAR_CSLAB_str2) )

}

def strcat(vAR_CSLAB_s1: String)(vAR_CSLAB_s2: String) = {

vAR_CSLAB_s1 + vAR_CSLAB_s2

}

}

Demo.CURRYING_FUNCTIONS(Array(" "))
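Because `strcat` has two parameter lists, its arguments can be supplied one list at a time. Passing only the first list produces a function that waits for the second. A function value can also be curried explicitly with nested arrows. A sketch with illustrative names:

```scala
object CurrySketch {
  def strcat(s1: String)(s2: String): String = s1 + s2

  // Supplying only the first parameter list yields a String => String function
  val greet: String => String = strcat("Hello, ")

  // A curried function value: each application takes one Int
  val add: Int => Int => Int = a => b => a + b
  val addThree: Int => Int = add(3)
}

println(CurrySketch.greet("Scala!"))
```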

/*****************************

File Name : CSLAB_CLOSURE_V1

Purpose : A Program for Closure in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 16:38 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Closure in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def CLOSURE (args: Array[String]): Unit = {

println( "vAR_CSLAB_multiplier(1) value = " + vAR_CSLAB_multiplier(1) )

println( "vAR_CSLAB_multiplier(2) value = " + vAR_CSLAB_multiplier(2) )

}

var vAR_CSLAB_factor = 3

val vAR_CSLAB_multiplier = (vAR_CSLAB_i:Int) => vAR_CSLAB_i * vAR_CSLAB_factor

}

Demo.CLOSURE(Array(" "))
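`vAR_CSLAB_multiplier` is a closure because it refers to `vAR_CSLAB_factor`, a variable defined outside the function. The closure captures the variable itself, not a copy of its value, so a later change to the variable changes the result. This sketch uses illustrative names. It also includes a counter whose state exists only inside a closure:

```scala
object ClosureSketch {
  var factor = 3
  val multiplier: Int => Int = i => i * factor

  // Each call to makeCounter creates a fresh count captured by the returned function
  def makeCounter(): () => Int = {
    var count = 0
    () => { count += 1; count }
  }
}

println(ClosureSketch.multiplier(2))
```

After `ClosureSketch.factor = 10`, the same call `multiplier(2)` returns 20.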

/*****************************

File Name : CSLAB_CREATING_A_STRING_V1

Purpose : A Program for Creation of Strings in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 16:54 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Creation of Strings in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

val vAR_CSLAB_greeting: String = "Hello, world!"

def main(args: Array[String]): Unit = {

println( vAR_CSLAB_greeting )

}

}

Demo.main(Array(" "))

/*****************************

File Name : CSLAB_CONCATENATING_STRINGS_V1

Purpose : A Program for Concatenating Strings in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 17:08 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Concatenating Strings in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def CONCATENATING_STRINGS (args: Array[String]): Unit = {

var vAR_CSLAB_str1 = "Dot saw I was ";

var vAR_CSLAB_str2 = "Tod";

println("Dot " + vAR_CSLAB_str1 + vAR_CSLAB_str2);

}

}

Demo.CONCATENATING_STRINGS(Array(" "))
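The `+` operator is one of several ways to join strings. `String.concat` from Java and the `s` interpolator produce the same result. A sketch with illustrative names:

```scala
object StringConcatSketch {
  val str1 = "Dot saw I was "
  val str2 = "Tod"

  val withPlus = "Dot " + str1 + str2
  val withConcat = "Dot ".concat(str1).concat(str2)   // java.lang.String.concat
  val withInterpolation = s"Dot $str1$str2"           // s interpolator splices values in
}

println(StringConcatSketch.withInterpolation)
```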

/*****************************

File Name : CSLAB_CREATING_FORMAT_STRINGS_V1

Purpose : A Program for Creating Format Strings in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 17:24 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Creating Format Strings in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def CREATING_FORMAT_STRINGS (args: Array[String]): Unit = {

var vAR_CSLAB_floatVar = 12.456

var vAR_CSLAB_intVar = 2000

var vAR_CSLAB_stringVar = "Hello, Scala!"

// format returns the formatted String; printf would print directly and return Unit

var vAR_CSLAB_fs = ("The value of the float variable is " + "%f, while the value of the integer " + "variable is %d, and the string " + "is %s").format(vAR_CSLAB_floatVar, vAR_CSLAB_intVar, vAR_CSLAB_stringVar)

println(vAR_CSLAB_fs)

}

}

Demo.CREATING_FORMAT_STRINGS(Array(" "))
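`printf` prints its result and returns `Unit`, whereas `format` returns the formatted `String`. The `f` interpolator also produces a string, and it checks at compile time that each value matches its format specifier. This sketch uses illustrative names. The decimal separator in the output depends on the JVM's default locale:

```scala
object FormatSketch {
  val floatVar = 12.456
  val intVar = 2000
  val stringVar = "Hello, Scala!"

  // format: specifiers are checked only at run time
  val viaFormat = "float %.2f, int %d, string %s".format(floatVar, intVar, stringVar)

  // f interpolator: a mismatched specifier, such as %d for a Double, fails to compile
  val viaInterpolator = f"float $floatVar%.2f, int $intVar%d, string $stringVar%s"
}

println(FormatSketch.viaInterpolator)
```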

/*****************************

File Name : CSLAB_DECLARING_ARRAY_VARIABLES_V1

Purpose : A Program for Declaring Array Variables in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 17:43 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Declaring Array Variables in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

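object Demo {

def DECLARING_ARRAY_VARIABLES (args: Array[String]): Unit = {

// Declare and initialize an array of three strings; the element type String is inferred

var vAR_CSLAB_z = Array("Zara", "Nuha", "Ayan")

// Print the elements so the declaration has a visible effect

println( vAR_CSLAB_z.mkString(", ") )

}

}

Demo.DECLARING_ARRAY_VARIABLES(Array(" "))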
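An array can also be declared with a fixed length and filled by index afterwards. `new Array[T](n)` sets every element to the default for `T`: `null` for `String` and `0` for `Int`. A sketch with illustrative names:

```scala
object ArrayDeclSketch {
  // Fixed length of 3, filled by index assignment
  val z1 = new Array[String](3)
  z1(0) = "Zara"
  z1(1) = "Nuha"
  z1(2) = "Ayan"

  // Length and element type inferred from the initial values
  val z2 = Array("Zara", "Nuha", "Ayan")

  // Int elements start at 0
  val zeros = new Array[Int](2)
}

println(ArrayDeclSketch.z1.mkString(", "))
```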

/*****************************

File Name : CSLAB_PROCESSING_ARRAYS_V1

Purpose : A Program for Processing Arrays in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 30/01/2019 18:07 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Processing Arrays in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def PROCESSING_ARRAYS (args: Array[String]): Unit = {

var vAR_CSLAB_myList = Array(1.9, 2.9, 3.4, 3.5)

// Print all the array elements

for ( vAR_CSLAB_x <- vAR_CSLAB_myList ) {

println( vAR_CSLAB_x )

}

// Summing all elements

var vAR_CSLAB_total = 0.0;

for ( vAR_CSLAB_i <- 0 to (vAR_CSLAB_myList.length - 1)) {

vAR_CSLAB_total += vAR_CSLAB_myList(vAR_CSLAB_i);

}

println("Total is " + vAR_CSLAB_total);

// Finding the largest element

var vAR_CSLAB_max = vAR_CSLAB_myList(0);

for ( vAR_CSLAB_i <- 1 to (vAR_CSLAB_myList.length - 1) ) {

if (vAR_CSLAB_myList(vAR_CSLAB_i) > vAR_CSLAB_max) vAR_CSLAB_max = vAR_CSLAB_myList(vAR_CSLAB_i);

}

println("Max is " + vAR_CSLAB_max);

}

}

Demo.PROCESSING_ARRAYS(Array(" "))
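Built-in collection methods, which work directly on arrays, can replace the summing and maximum loops. Note that `max` throws an exception on an empty array. A sketch with illustrative names:

```scala
object ArrayOpsSketch {
  val myList = Array(1.9, 2.9, 3.4, 3.5)

  val total = myList.sum      // replaces the index-based summing loop
  val largest = myList.max    // replaces the manual maximum search
}

println("Total is " + ArrayOpsSketch.total)
println("Max is " + ArrayOpsSketch.largest)
```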

/*****************************

File Name : CSLAB_MULTI_DIMENSIONAL_ARRAYS_V1

Purpose : A Program for Multi-Dimensional Arrays in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 31/01/2019 9:47 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Multi-Dimensional Arrays in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

import Array._

object Demo {

def MULTI_DIMENSIONAL_ARRAYS (args: Array[String]): Unit = {

var vAR_CSLAB_myMatrix = ofDim[Int](3,3)

// build a matrix

for (vAR_CSLAB_i <- 0 to 2) {

for ( vAR_CSLAB_j <- 0 to 2) {

vAR_CSLAB_myMatrix(vAR_CSLAB_i)(vAR_CSLAB_j) = vAR_CSLAB_j;

}

}

// Print two dimensional array

for (vAR_CSLAB_i <- 0 to 2) {

for ( vAR_CSLAB_j <- 0 to 2) {

print(" " + vAR_CSLAB_myMatrix(vAR_CSLAB_i)(vAR_CSLAB_j));

}

println();

}

}

}

Demo.MULTI_DIMENSIONAL_ARRAYS(Array(" "))
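`Array.tabulate` builds a multi-dimensional array from a function of the indices, replacing the nested loops that fill the matrix. `mkString` can then render each row. This sketch builds the same 3 x 3 matrix, in which each element equals its column index; the names are illustrative:

```scala
object MatrixSketch {
  // Element (i)(j) is set to j, as in the nested loops above
  val matrix: Array[Array[Int]] = Array.tabulate(3, 3)((_, j) => j)

  val rendered: String = matrix.map(row => row.mkString(" ")).mkString("\n")
}

println(MatrixSketch.rendered)
```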

/*****************************

File Name : CSLAB_CONCATENATING_ARRAYS_V1

Purpose : A Program for Concatenating Arrays in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 31/01/2019 10:12 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Concatenating Arrays in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

import Array._

object Demo {

def CONCATENATING_ARRAYS (args: Array[String]): Unit = {

var vAR_CSLAB_myList1 = Array(1.9, 2.9, 3.4, 3.5)

var vAR_CSLAB_myList2 = Array(8.9, 7.9, 0.4, 1.5)

var vAR_CSLAB_myList3 = concat( vAR_CSLAB_myList1, vAR_CSLAB_myList2)

// Print all the array elements

for ( vAR_CSLAB_x <- vAR_CSLAB_myList3 ) {

println( vAR_CSLAB_x )

}

}

}

Demo.CONCATENATING_ARRAYS(Array(" "))
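The `++` operator also concatenates arrays. Like `Array.concat`, it returns a new array and leaves both inputs unchanged. A sketch with illustrative names:

```scala
object ArrayConcatSketch {
  val myList1 = Array(1.9, 2.9, 3.4, 3.5)
  val myList2 = Array(8.9, 7.9, 0.4, 1.5)

  // ++ returns a new array; neither input is modified
  val joined = myList1 ++ myList2
  val viaConcat = Array.concat(myList1, myList2)
}

println(ArrayConcatSketch.joined.mkString(" "))
```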

/*****************************

File Name : CSLAB_CREATING_ARRAYS_WITH_RANGE_V1

Purpose : A Program for Creating Arrays With Range in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 31/01/2019 10:25 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Creating Arrays With Range in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

import Array._

object Demo {

def ARRAYS_WITH_RANGE (args: Array[String]): Unit = {

var vAR_CSLAB_myList1 = range(10, 20, 2)

var vAR_CSLAB_myList2 = range(10,20)

// Print all the array elements

for ( vAR_CSLAB_x <- vAR_CSLAB_myList1 ) {

print( " " + vAR_CSLAB_x )

}

println()

for ( vAR_CSLAB_x <- vAR_CSLAB_myList2 ) {

print( " " + vAR_CSLAB_x )

}

}

}

Demo.ARRAYS_WITH_RANGE(Array(" "))
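`Array.range(start, end, step)` excludes `end`, so `range(10, 20, 2)` yields 10, 12, 14, 16, 18. A `Range` built with `until` and `by` produces the same values. A sketch with illustrative names:

```scala
object RangeSketch {
  val stepped = Array.range(10, 20, 2)        // end value 20 is excluded
  val viaRange = (10 until 20 by 2).toArray
}

println(RangeSketch.stepped.mkString(" "))
```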

/*****************************

File Name : CSLAB_COLLECTIONS_V1

Purpose : A Program for Collections in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 31/01/2019 10:42 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Collections in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

// Define List of integers.

val vAR_CSLAB_x = List(1,2,3,4)

// Define a set.

var vAR_CSLAB_x1 = Set(1,3,5,7)

// Define a map.

val vAR_CSLAB_x2 = Map("one" -> 1, "two" -> 2, "three" -> 3)

// Create a tuple of two elements.

val vAR_CSLAB_x3 = (10, "Scala")

// Define an option

val vAR_CSLAB_x4:Option[Int] = Some(5)
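The definitions above only create the collections. The sketch below, with illustrative names, shows one typical read operation for each. `Map.get` returns an `Option`, so a missing key yields `None` rather than an exception:

```scala
object CollectionsSketch {
  val numbers = List(1, 2, 3, 4)
  val odds = Set(1, 3, 5, 7)
  val lookup = Map("one" -> 1, "two" -> 2, "three" -> 3)
  val pair = (10, "Scala")
  val maybeFive: Option[Int] = Some(5)

  val doubled = numbers.map(_ * 2)          // List(2, 4, 6, 8)
  val hasThree = odds.contains(3)           // true
  val two = lookup.get("two")               // Some(2)
  val four = lookup.getOrElse("four", 0)    // 0, because the key is absent
  val second = pair._2                      // "Scala"
  val five = maybeFive.getOrElse(0)         // 5
}
```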

/*****************************

File Name : CSLAB_LISTS_V1

Purpose : A Program for Lists in Collections in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 31/01/2019 11:02 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Lists in Collections in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def LISTS (args: Array[String]): Unit = {

val vAR_CSLAB_fruit = "apples" :: ("oranges" :: ("pears" :: Nil))

val vAR_CSLAB_nums = Nil

println( "Head of fruit : " + vAR_CSLAB_fruit.head )

println( "Tail of fruit : " + vAR_CSLAB_fruit.tail )

println( "Check if fruit is empty : " + vAR_CSLAB_fruit.isEmpty )

println( "Check if nums is empty : " + vAR_CSLAB_nums.isEmpty )

}

}

Demo.LISTS(Array(" "))
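`::` is right-associative, so the parentheses in the definition of `vAR_CSLAB_fruit` can be dropped, and the result equals the list built with `List(...)`. Prepending with `::` returns a new list and leaves the original unchanged. A sketch with illustrative names:

```scala
object ListSketch {
  val fruit = "apples" :: ("oranges" :: ("pears" :: Nil))

  val sameFruit = "apples" :: "oranges" :: "pears" :: Nil   // no parentheses needed
  val viaFactory = List("apples", "oranges", "pears")

  val withKiwi = "kiwis" :: fruit     // new list; fruit is unchanged
}

println(ListSketch.withKiwi)
```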

/*****************************

File Name : CSLAB_COLLECTIONS_CONCATENATING_LISTS_V1

Purpose : A Program for Concatenating Lists in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 31/01/2019 11:18 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Concatenating Lists in Collections in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def CONCATENATING_LISTS(args: Array[String]): Unit = {

val vAR_CSLAB_fruit1 = "apples" :: ("oranges" :: ("pears" :: Nil))

val vAR_CSLAB_fruit2 = "mangoes" :: ("banana" :: Nil)

// use two or more lists with ::: operator

var vAR_CSLAB_fruit = vAR_CSLAB_fruit1 ::: vAR_CSLAB_fruit2

println( "vAR_CSLAB_fruit1 ::: vAR_CSLAB_fruit2 : " + vAR_CSLAB_fruit )

// call ::: as a List method; fruit1.:::(fruit2) prepends fruit2, so the result equals fruit2 ::: fruit1

vAR_CSLAB_fruit = vAR_CSLAB_fruit1.:::(vAR_CSLAB_fruit2)

println( "vAR_CSLAB_fruit1.:::(vAR_CSLAB_fruit2) : " + vAR_CSLAB_fruit )

// pass two or more lists as arguments

vAR_CSLAB_fruit = List.concat(vAR_CSLAB_fruit1, vAR_CSLAB_fruit2)

println( "List.concat(vAR_CSLAB_fruit1, vAR_CSLAB_fruit2) : " + vAR_CSLAB_fruit)

}

}

Demo.CONCATENATING_LISTS(Array(" "))
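Because operators ending in `:` are right-associative in Scala, the infix form `fruit1 ::: fruit2` and the method call `fruit1.:::(fruit2)` produce lists in opposite orders. The sketch below makes that difference explicit and compares both with `++` and `List.concat`. It assumes Scala 2.13 or later; the object name is hypothetical.

```scala
// Hypothetical helper comparing the list concatenation forms used above.
object ListConcatOrderSketch {
  val fruit1 = List("apples", "oranges", "pears")
  val fruit2 = List("mangoes", "banana")

  // Infix form: fruit1's elements come first.
  def infix: List[String] = fruit1 ::: fruit2

  // Method form: ::: prepends its argument, so fruit2's elements come first.
  def methodCall: List[String] = fruit1.:::(fruit2)

  // ++ and List.concat keep left-to-right order, like the infix form.
  def plusPlus: List[String] = fruit1 ++ fruit2
  def concatenated: List[String] = List.concat(fruit1, fruit2)

  def main(args: Array[String]): Unit = {
    println(infix)      // List(apples, oranges, pears, mangoes, banana)
    println(methodCall) // List(mangoes, banana, apples, oranges, pears)
  }
}
```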

/*****************************

File Name : CSLAB_COLLECTIONS_UNIFORM_LISTS_V1

Purpose : A Program for Uniform Lists in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 31/01/2019 11:34 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Uniform Lists in Collections in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def UNIFORM_LISTS(args: Array[String]): Unit = {

val vAR_CSLAB_fruit = List.fill(3)("apples") // Repeats apples three times.

println( "vAR_CSLAB_fruit : " + vAR_CSLAB_fruit )

val vAR_CSLAB_num = List.fill(10)(2) // Repeats the value 2 ten times.

println( "vAR_CSLAB_num : " + vAR_CSLAB_num )

}

}

Demo.UNIFORM_LISTS(Array(" "))
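`List.fill` takes its element argument by name, so the expression is evaluated once per slot rather than once overall, and `List.tabulate` builds each element from its index. This minimal sketch contrasts the two; it assumes Scala 2.13 or later, and `UniformListSketch` and `counted` are hypothetical names.

```scala
// Hypothetical helper comparing List.fill and List.tabulate.
object UniformListSketch {
  // Ten copies of the constant 2, as in the program above.
  val twos: List[Int] = List.fill(10)(2)

  // List.tabulate computes element i from its index: 0, 1, 4, 9, 16.
  val squares: List[Int] = List.tabulate(5)(i => i * i)

  // The block is re-evaluated for every slot, so the counter advances each time.
  def counted(n: Int): List[Int] = {
    var counter = 0
    List.fill(n) { counter += 1; counter }
  }

  def main(args: Array[String]): Unit = {
    println(squares)    // List(0, 1, 4, 9, 16)
    println(counted(3)) // List(1, 2, 3)
  }
}
```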

/*****************************

File Name : CSLAB_SETS_V1

Purpose : A Program for Sets in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 31/01/2019 11:51 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Sets in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def SETS(args: Array[String]): Unit = {

val vAR_CSLAB_fruit = Set("apples", "oranges", "pears")

val vAR_CSLAB_nums: Set[Int] = Set()

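// A general Set does not promise an iteration order, so head and tail return whichever elements the implementation visits first.

println( "Head of fruit : " + vAR_CSLAB_fruit.head )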

println( "Tail of fruit : " + vAR_CSLAB_fruit.tail )

println( "Check if fruit is empty : " + vAR_CSLAB_fruit.isEmpty )

println( "Check if nums is empty : " + vAR_CSLAB_nums.isEmpty )

}

}

Demo.SETS(Array(" "))
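Since a plain `Set` makes no ordering promise, its central operation is membership testing, and adding an element that is already present has no effect. When a predictable `head` is needed, a `SortedSet` iterates in ascending order. The sketch below assumes Scala 2.13 or later; `SetOrderingSketch` and its members are hypothetical names.

```scala
import scala.collection.immutable.SortedSet

// Hypothetical helper illustrating set membership, deduplication and ordering.
object SetOrderingSketch {
  val fruit: Set[String] = Set("apples", "oranges", "pears")

  // Membership test, the core Set operation.
  def hasPears: Boolean = fruit.contains("pears")

  // Adding an existing element leaves the set unchanged (still three elements).
  def withDuplicate: Set[String] = fruit + "apples"

  // A SortedSet iterates in ascending order, so head is the smallest element.
  val sorted: SortedSet[String] = SortedSet("pears", "apples", "oranges")

  def main(args: Array[String]): Unit = {
    println(hasPears)           // true
    println(withDuplicate.size) // 3
    println(sorted.head)        // apples
  }
}
```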

/*****************************

File Name : CSLAB_CONCATENATING_SETS_V1

Purpose : A Program for Concatenating Sets in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 31/01/2019 12:07 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Concatenating Sets in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def CONCATENATING_SETS(args: Array[String]): Unit = {

val vAR_CSLAB_fruit1 = Set("apples", "oranges", "pears")

val vAR_CSLAB_fruit2 = Set("mangoes", "banana")

// use two or more sets with ++ as operator

var vAR_CSLAB_fruit = vAR_CSLAB_fruit1 ++ vAR_CSLAB_fruit2

println( "vAR_CSLAB_fruit1 ++ vAR_CSLAB_fruit2 : " + vAR_CSLAB_fruit )

// call ++ as a method; the result is the same set

vAR_CSLAB_fruit = vAR_CSLAB_fruit1.++(vAR_CSLAB_fruit2)

println( "vAR_CSLAB_fruit1.++(vAR_CSLAB_fruit2) : " + vAR_CSLAB_fruit )

}

}

Demo.CONCATENATING_SETS(Array(" "))
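The two fruit sets above share no elements, so the concatenation hides one property of `++` on sets: an element present in both operands appears only once in the result. This sketch uses overlapping sets to show that, and that `union` and `|` give the same result as `++`. It assumes Scala 2.13 or later; the object name is hypothetical.

```scala
// Hypothetical helper: concatenating sets that share an element.
object SetUnionSketch {
  val a = Set("apples", "oranges", "pears")
  val b = Set("pears", "mangoes")

  // ++ builds a new set; the shared "pears" is kept only once.
  def combined: Set[String] = a ++ b

  // union and | are equivalent to ++ for two sets.
  def viaUnion: Set[String] = a.union(b)
  def viaBar: Set[String] = a | b

  def main(args: Array[String]): Unit = {
    println(combined.size) // 4
  }
}
```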

/*****************************

File Name : CSLAB_ELEMENTS_IN_SETS_V1

Purpose : A Program for Elements in Sets in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 31/01/2019 12:23 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Elements in Sets in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def ELEMENTS_IN_SETS(args: Array[String]): Unit = {

val vAR_CSLAB_num = Set(5,6,9,20,30,45)

// find min and max of the elements

println( "Min element in Set(5,6,9,20,30,45) : " + vAR_CSLAB_num.min )

println( "Max element in Set(5,6,9,20,30,45) : " + vAR_CSLAB_num.max )

}

}

Demo.ELEMENTS_IN_SETS(Array(" "))
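`min` and `max` work here because the set is non-empty; on an empty collection they throw `UnsupportedOperationException`. A minimal sketch of the empty-safe `minOption` and `maxOption` methods, available from Scala 2.13, is below; `SetMinMaxSketch`, `range` and `minOrMessage` are hypothetical names.

```scala
// Hypothetical helper showing empty-safe min/max on a Set (Scala 2.13+).
object SetMinMaxSketch {
  val nums = Set(5, 6, 9, 20, 30, 45)
  val empty = Set.empty[Int]

  // Returns Some((min, max)) for a non-empty set, None for an empty one.
  def range(s: Set[Int]): Option[(Int, Int)] =
    for {
      lo <- s.minOption
      hi <- s.maxOption
    } yield (lo, hi)

  // Plain min throws on an empty set; here the exception is caught explicitly.
  def minOrMessage(s: Set[Int]): String =
    try s.min.toString
    catch { case _: UnsupportedOperationException => "empty set" }

  def main(args: Array[String]): Unit = {
    println(range(nums))         // Some((5,45))
    println(range(empty))        // None
    println(minOrMessage(empty)) // empty set
  }
}
```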

/*****************************

File Name : CSLAB_COMMON_VALUES_IN_SETS_V1

Purpose : A Program for Common Values in Sets in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 31/01/2019 12:41 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Common Values in Sets in Scala

## Scala Development Environment & Runtime - Eclipse IDE, Anaconda, Jupyter

object Demo {

def COMMON_VALUES_IN_SETS(args: Array[String]): Unit = {

val vAR_CSLAB_num1 = Set(5,6,9,20,30,45)

val vAR_CSLAB_num2 = Set(50,60,9,20,35,55)

// find common elements between two sets

println( "vAR_CSLAB_num1.&(vAR_CSLAB_num2) : " + vAR_CSLAB_num1.&(vAR_CSLAB_num2) )

println( "vAR_CSLAB_num1.intersect(vAR_CSLAB_num2) : " + vAR_CSLAB_num1.intersect(vAR_CSLAB_num2) )

}

}

Demo.COMMON_VALUES_IN_SETS(Array(" "))
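Intersection is one of three basic set-algebra operations. The sketch below applies intersection, difference and union to the same two sets used above, with each operator alongside its named equivalent. It assumes Scala 2.13 or later; `SetAlgebraSketch` is a hypothetical name.

```scala
// Hypothetical helper: intersection, difference and union of two sets.
object SetAlgebraSketch {
  val num1 = Set(5, 6, 9, 20, 30, 45)
  val num2 = Set(50, 60, 9, 20, 35, 55)

  def common: Set[Int] = num1 & num2        // same as num1.intersect(num2): {9, 20}
  def onlyInFirst: Set[Int] = num1 &~ num2  // same as num1.diff(num2): {5, 6, 30, 45}
  def all: Set[Int] = num1 | num2           // same as num1.union(num2): 10 elements

  def main(args: Array[String]): Unit = {
    println(common == Set(9, 20)) // true
    println(all.size)             // 10
  }
}
```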

/*****************************

File Name : CSLAB_MAPS_V1

Purpose : A Program for Maps in Scala

Author : DeepSphere.AI, Inc.

Date and Time : 31/01/2019 12:57 hrs

Version : 1.0

/*****************************

## Program Description : A Program for Maps in Scala